Exam Preparation: What Parents Should Know

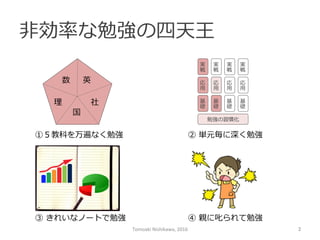

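- 2. The big four of inefficient studying: (1) studying all five subjects evenly, (2) studying each unit in depth, (3) studying with neatly kept notes, (4) studying because a parent scolds. (Diagram labels: math, English, Japanese, social studies, science; basic, applied, and exam-level stages; building a study habit.) Tomoaki Nishikawa, 2016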

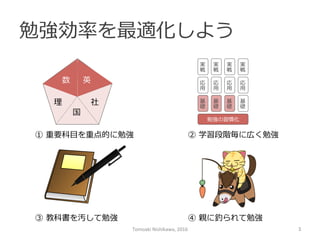

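- 3. Optimize your study efficiency: (1) focus on the important subjects, (2) study broadly, one learning stage at a time, (3) study by marking up the textbook, (4) study because a parent draws you in. (Diagram labels: Japanese, social studies, science, math, English; basic, applied, and exam-level stages; building a study habit.)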

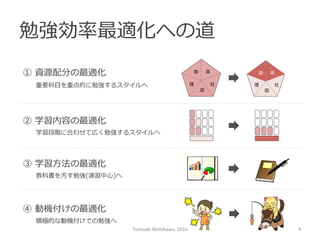

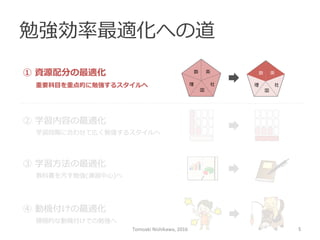

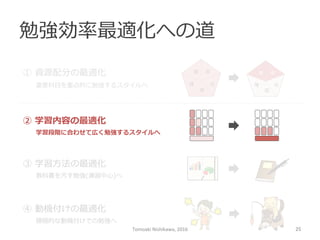

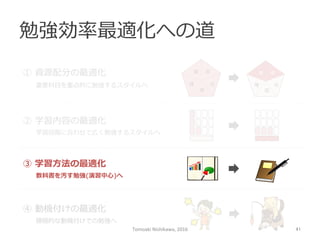

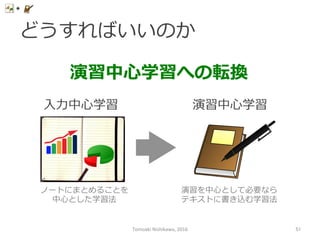

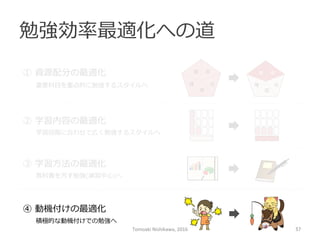

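- 4. The road to optimizing study efficiency
  - (1) Optimize resource allocation: move to a style that concentrates on the important subjects.
  - (2) Optimize learning content: move to a style that studies broadly, in step with the learning stage.
  - (3) Optimize learning method: move to studying that marks up the textbook (practice-centered).
  - (4) Optimize motivation: move to studying driven by positive motivation.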


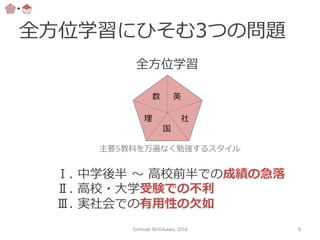

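- 6. Three problems lurking in all-round study. All-round study is the style of studying the five main subjects evenly.
  - I. A sharp drop in grades between the second half of junior high school and the first half of high school
  - II. A disadvantage in high school and university entrance exams
  - III. A lack of usefulness in the real world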

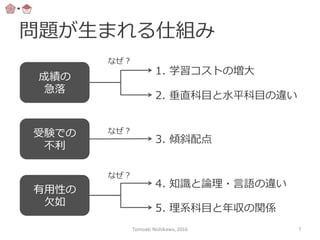

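- 7. How the problems arise
  - Sharp drop in grades. Why? 1. Rising learning cost; 2. The difference between vertical and horizontal subjects
  - Disadvantage in exams. Why? 3. Weighted scoring
  - Lack of usefulness. Why? 4. The difference between knowledge and logic/language; 5. The relationship between science subjects and income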

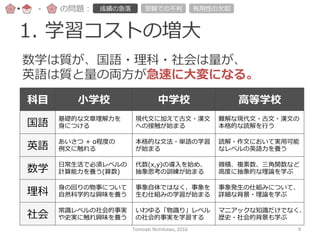

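- 8. 1. Rising learning cost. The workload grows rapidly: in difficulty for math, in volume for Japanese, science, and social studies, and in both for English.

  | Subject | Elementary school | Junior high school | High school |
  | --- | --- | --- | --- |
  | Japanese | Acquire basic reading comprehension | Classical Japanese and classical Chinese are introduced alongside modern texts | Read difficult modern, classical Japanese, and classical Chinese texts in earnest |
  | English | Encounter greetings and a few example sentences | Full-scale study of grammar and vocabulary begins | Build English that is usable in practice for reading and writing |
  | Math | Build the calculation skills needed for daily life (arithmetic) | Algebra (x, y) is introduced and training in abstract thinking begins | Learn highly abstract theory such as calculus, complex numbers, and trigonometric functions |
  | Science | Foster scientific curiosity about everyday things | Study shifts from phenomena themselves to the mechanisms that produce them | Learn the detailed background and theory of those mechanisms |
  | Social studies | Encounter common-sense social and historical facts to foster interest | Learn social facts at the level of a "well-informed person" | Learn not only specialist-level detail but also its historical and social background |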

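- 9. 2. Vertical subjects and horizontal subjects

  | | Vertical subjects | Horizontal subjects |
  | --- | --- | --- |
  | Subjects | Math, English, science (physics) | Social studies, science (other than physics), Japanese |
  | Definition | Subjects in which the current material is hard to understand unless the earlier stages were properly understood | Subjects in which the current material can be understood without trouble even if earlier learning is incomplete |
  | Example sequence | Expansion formulas and factoring → higher-degree equations → calculus | Kamakura period → Muromachi period → Sengoku period |
  | Raising scores | Takes time | Can be done quickly |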

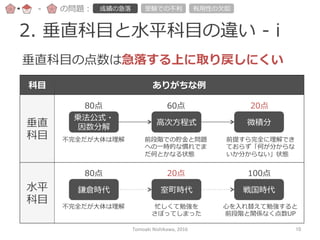

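- 10. 2. The difference between vertical and horizontal subjects (i): scores in vertical subjects drop sharply and are hard to win back. A typical example:

  | Subject type | Stage 1 | Stage 2 | Stage 3 |
  | --- | --- | --- | --- |
  | Vertical | Expansion formulas and factoring: 80 points. Incomplete, but mostly understood. | Higher-degree equations: 60 points. Still getting by on savings from the previous stage and temporary familiarity with the problems. | Calculus: 20 points. Even the prerequisites are not fully understood; the student "doesn't know what they don't know". |
  | Horizontal | Kamakura period: 80 points. Incomplete, but mostly understood. | Muromachi period: 20 points. Too busy, so studying was skipped. | Sengoku period: 100 points. After a fresh start, the score rises regardless of the previous stage. |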

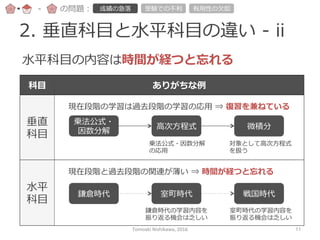

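- 11. 2. The difference between vertical and horizontal subjects (ii): the content of horizontal subjects is forgotten over time. A typical example:

  | Subject type | Sequence | What happens |
  | --- | --- | --- |
  | Vertical | Expansion formulas and factoring → higher-degree equations → calculus | Higher-degree equations apply expansion formulas and factoring, and calculus takes higher-degree equations as its objects. Current learning applies past learning, so it doubles as review. |
  | Horizontal | Kamakura period → Muromachi period → Sengoku period | There are few chances to look back at Kamakura-period material, and later few chances to look back at Muromachi-period material. The current stage has little connection to past stages, so earlier content is forgotten over time. |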

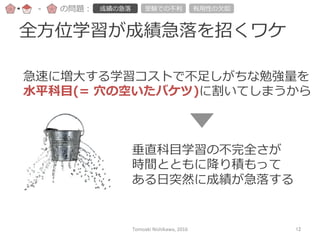

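- 12. Why all-round study causes the sharp drop in grades: study time is already stretched thin by rapidly rising learning costs, and all-round study pours it into horizontal subjects (a bucket with a hole in it). Gaps in vertical-subject learning pile up over time until, one day, grades suddenly collapse.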

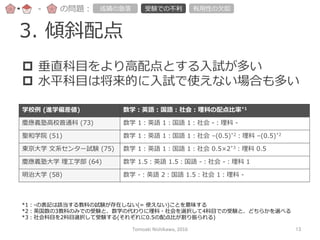

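- 13. 3. Weighted scoring
  - Many entrance exams give vertical subjects a higher weight.
  - Horizontal subjects often cannot be used at all in later entrance exams.

  | School (admission deviation score) | Math | English | Japanese | Social studies | Science |
  | --- | --- | --- | --- | --- | --- |
  | Keio Senior High School, general course (73) | 1 | 1 | 1 | - | - |
  | Seiwa Gakuin (51) | 1 | 1 | 1 | - (0.5) *2 | - (0.5) *2 |
  | University of Tokyo, humanities, Center Test (75) | 1 | 1 | 1 | 0.5 × 2 *3 | 0.5 |
  | Keio University, Faculty of Science and Technology (64) | 1.5 | 1.5 | - | - | 1 |
  | Meiji University (58) | - | 2 | 1.5 | 1 | - |

  Values are weighting ratios across math : English : Japanese : social studies : science. *1
  - *1: "-" means there is no exam in that subject (it cannot be used).
  - *2: Applicants choose between taking three subjects only (English, Japanese, math) or taking four subjects with science and social studies in place of math.
  - *3: Applicants take two social-studies subjects, each with a weight of 0.5.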
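To make the effect of weighting concrete, here is a minimal sketch that applies two of the ratios from this slide to two hypothetical students with the same raw total. The student scores, the `weighted_score` helper, and the choice to normalize to a 0-100 scale are illustrative assumptions, not part of the original deck.

```python
# Weight ratios copied from the slide; None marks a subject with no exam.
WEIGHTS = {
    "Keio University, Science and Technology": {
        "math": 1.5, "english": 1.5, "japanese": None, "social": None, "science": 1.0,
    },
    "Meiji University": {
        "math": None, "english": 2.0, "japanese": 1.5, "social": 1.0, "science": None,
    },
}


def weighted_score(raw_scores, weights):
    """Return the weighted average (0-100) over the examined subjects only."""
    used = {subject: w for subject, w in weights.items() if w is not None}
    total_weight = sum(used.values())
    return sum(raw_scores[subject] * w for subject, w in used.items()) / total_weight


# Hypothetical students: both total 300 raw points out of 500.
all_round = {"math": 60, "english": 60, "japanese": 60, "social": 60, "science": 60}
focused = {"math": 80, "english": 80, "japanese": 50, "social": 40, "science": 50}

for school, weights in WEIGHTS.items():
    print(school, weighted_score(all_round, weights), round(weighted_score(focused, weights), 1))
```

With these made-up numbers the student who concentrated on math and English comes out ahead under both weightings despite an identical raw total, which is the point the slide is making.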

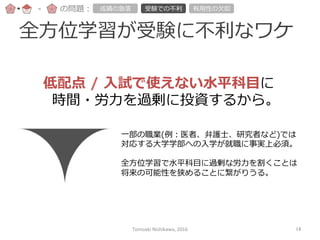

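- 14. Why all-round study is a disadvantage in exams: it over-invests time and effort in horizontal subjects that carry a low weight or cannot be used in the exam at all. For some professions (for example doctor, lawyer, or researcher), admission to the corresponding university faculty is in practice a requirement for entering the field. Pouring excessive effort into horizontal subjects through all-round study can therefore narrow a child's future options.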

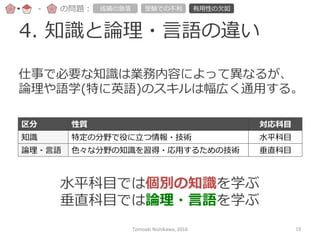

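- 15. 4. The difference between knowledge and logic/language. The knowledge needed at work depends on the job, but skills in logic and language (especially English) apply widely.

  | Category | Nature | Corresponding subjects |
  | --- | --- | --- |
  | Knowledge | Information and techniques that are useful in a specific field | Horizontal subjects |
  | Logic and language | Skills for acquiring and applying knowledge across many fields | Vertical subjects |

  Horizontal subjects teach individual pieces of knowledge; vertical subjects teach logic and language.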

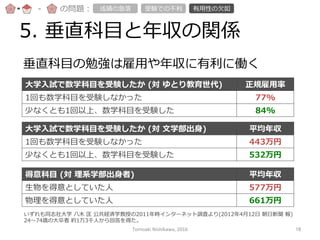

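- 16. 5. Vertical subjects and income. Studying vertical subjects works in favor of employment and income.

  | Took a math exam in university entrance exams? (Yutori-education generation) | Regular employment rate |
  | --- | --- |
  | Never took a math exam | 77% |
  | Took a math exam at least once | 84% |

  | Took a math exam in university entrance exams? (humanities-faculty graduates) | Average annual income |
  | --- | --- |
  | Never took a math exam | 4.43 million yen |
  | Took a math exam at least once | 5.32 million yen |

  | Strongest subject (science-faculty graduates) | Average annual income |
  | --- | --- |
  | Biology | 5.77 million yen |
  | Physics | 6.61 million yen |

  All figures are from a 2011 internet survey by Tadashi Yagi, professor of public economics at Doshisha University (reported in the Asahi Shimbun, April 12, 2012). About 13,000 university graduates aged 24 to 74 responded.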

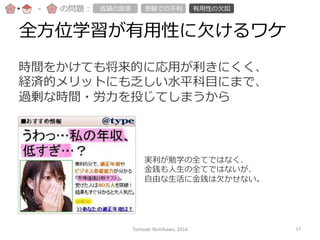

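- 17. Why all-round study lacks usefulness: it pours excessive time and effort even into horizontal subjects, which are hard to apply later no matter how much time is spent and which offer little economic benefit. Practical gain is not all there is to learning, and money is not all there is to life, but a free life is not possible without money.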

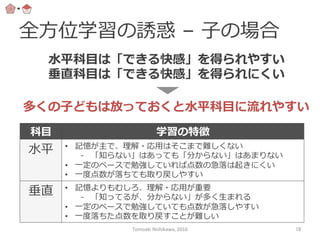

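- 18. The temptation of all-round study: the child's side

  | Subject type | Characteristics of learning |
  | --- | --- |
  | Horizontal | Mostly memorization; understanding and application are not that hard ("I don't know it" happens, "I don't understand it" rarely does). Scores rarely plunge if you study at a steady pace. A dropped score is easy to win back. |
  | Vertical | Understanding and application matter more than memorization ("I know it, but I don't understand it" happens a lot). Scores can plunge even if you study at a steady pace. A dropped score is hard to win back. |

  Horizontal subjects readily give the "pleasure of being able to do it"; vertical subjects do not. Left alone, most children drift toward horizontal subjects.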

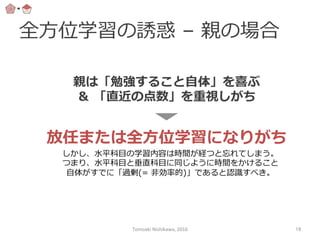

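- 19. The temptation of all-round study: the parent's side. Parents tend to be pleased by the mere fact that the child is studying and to focus on the most recent scores, so they tend to drift into either a hands-off approach or all-round study. But the content of horizontal subjects is forgotten over time. Spending the same amount of time on horizontal and vertical subjects should therefore itself be recognized as already excessive (that is, inefficient).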

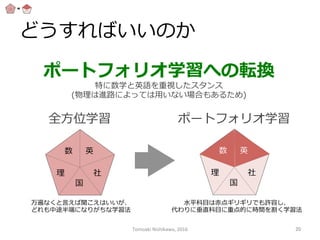

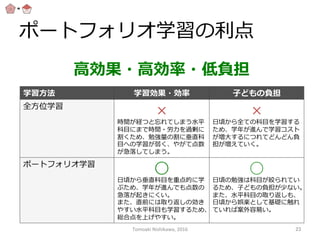

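- 20. What to do: switch to portfolio study, a stance that prioritizes math and English in particular (physics may not be needed, depending on the child's path).
  - All-round study: "evenly" sounds good, but every subject tends to end up half-done.
  - Portfolio study: accept scores in horizontal subjects that only just clear the failing line, and spend the time saved on vertical subjects.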

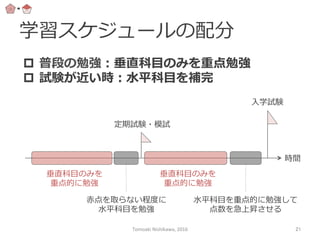

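- 21. Allocating the study schedule
  - On ordinary days: concentrate on vertical subjects only.
  - When an exam is near: top up the horizontal subjects.
  - Timeline: concentrate on vertical subjects only → before regular tests and mock exams, study horizontal subjects just enough to avoid failing → concentrate on vertical subjects only → before the entrance exam, study horizontal subjects intensively to raise scores quickly.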


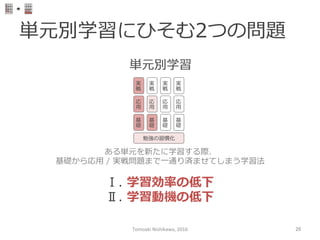

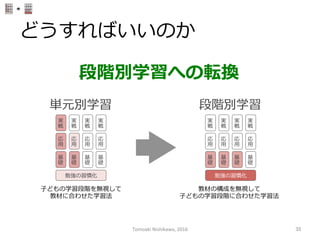

- 26. Two problems lurking in unit-by-unit study. Unit-by-unit study is the method of working through everything, from basics to applied and exam-level problems, each time a new unit is learned.
  - I. Lower learning efficiency
  - II. Lower motivation to learn

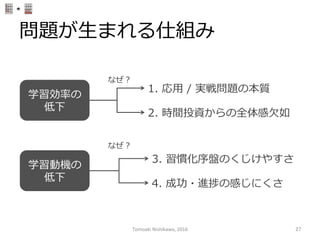

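- 27. How the problems arise
  - Lower learning efficiency. Why? 1. The nature of applied and exam-level problems; 2. Time investment made without the overall picture
  - Lower motivation to learn. Why? 3. How easily people give up early in habit formation; 4. How hard it is to feel success and progress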

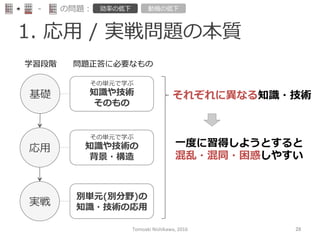

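- 28. 1. The nature of applied and exam-level problems

  | Learning stage | What a correct answer requires |
  | --- | --- |
  | Basic | The knowledge and techniques taught in the unit themselves |
  | Applied | The background and structure of the knowledge and techniques taught in the unit |
  | Exam-level | Application of knowledge and techniques from other units (other fields) |

  Each stage calls for different knowledge and techniques. Trying to acquire them all at once easily leads to confusion and mix-ups.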

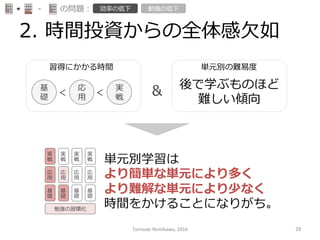

- 29. 2. Time investment made without the overall picture
  - Time needed for mastery: basic < applied < exam-level.
  - Difficulty by unit: units learned later tend to be harder.
  - As a result, unit-by-unit study tends to spend more time on the easier units and less time on the harder ones.

- 30. Why learning efficiency falls: it is like rushing off on a grand adventure with neither a map nor a plan. You can expect plenty of drama, but not efficiency.

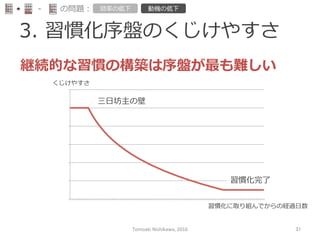

- 31. 3. How easily people give up early in habit formation. Building a lasting habit is hardest at the start. (Chart: likelihood of giving up, on a 0 to 1.2 scale, against days since starting to build the habit, from day 1 to day 100; annotations: "the three-day wall" and "habit established".)

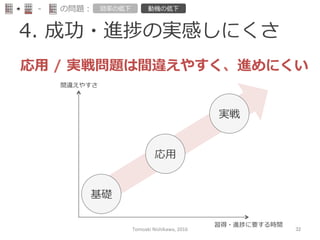

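- 32. 4. How hard it is to feel success and progress. Applied and exam-level problems are easy to get wrong and slow to get through. (Chart: likelihood of errors and time needed for mastery and progress, across the basic, applied, and exam-level stages.)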

- 33. Why motivation to learn falls: people lose heart easily when they get no sense of progress at exactly the time they are still inexperienced.

- 34. The temptation of unit-by-unit study
  - Most study materials are organized for unit-by-unit study.
  - Unit-by-unit study is what helps most on the next test.

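- 36. Overall structure of the learning steps

  | Stage | What to do | Aim |
  | --- | --- | --- |
  | Basic | Memorize the knowledge and techniques, then drill and review basic problems, all the way to the end of the range needed for the exam | Memorize and consolidate knowledge and techniques; establish a study habit |
  | Applied | Drill and review applied problems, all the way to the end of the range needed for the exam | Understand the structure of the knowledge and techniques; identify strong and weak areas |
  | Exam-level | Drill exam-level problems selectively, based on the target school's question trends and the student's own strengths | Add points efficiently; build experience in transferring knowledge across fields |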

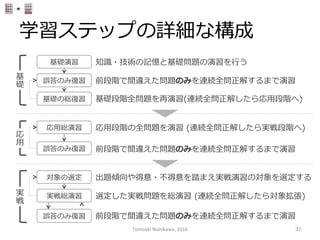

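- 37. Detailed structure of the learning steps

  | Stage | Step | What to do |
  | --- | --- | --- |
  | Basic | Basic drill | Memorize the knowledge and techniques and drill the basic problems |
  | Basic | Missed-only review | Drill only the problems missed in the previous step until all of them are answered correctly in a row |
  | Basic | Full basic review | Redo every problem in the basic stage (move on to the applied stage once all are answered correctly in a row) |
  | Applied | Full applied drill | Drill every problem in the applied stage (move on to the exam-level stage once all are answered correctly in a row) |
  | Applied | Missed-only review | Drill only the problems missed in the previous step until all of them are answered correctly in a row |
  | Exam-level | Target selection | Choose the targets for exam-level drilling based on question trends and the student's strengths and weaknesses |
  | Exam-level | Full exam-level drill | Drill all the selected exam-level problems (expand the target set once all are answered correctly in a row) |
  | Exam-level | Missed-only review | Drill only the problems missed in the previous step until all of them are answered correctly in a row |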
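The loop within one stage (full drill, then missed-only review, then a full pass again) can be sketched as follows. This is only an illustration under stated assumptions: `make_learner` is a hypothetical stand-in for a student who gets a problem right from a fixed number of attempts onward, and "all answered correctly in a row" is read here as "one complete pass with no mistakes".

```python
from collections import Counter


def make_learner(attempts_needed):
    """Hypothetical student: answers problem p correctly from the
    attempts_needed[p]-th try onward."""
    seen = Counter()

    def attempt(problem):
        seen[problem] += 1
        return seen[problem] >= attempts_needed[problem]

    return attempt


def clean_pass(problems, attempt):
    """Attempt every problem once; True only if all were answered correctly."""
    results = [attempt(p) for p in problems]  # no short-circuit: every problem is practiced
    return all(results)


def run_stage(problems, attempt):
    """Run one stage and return how many full passes it took to finish it."""
    full_passes = 0
    while True:
        full_passes += 1
        missed = [p for p in problems if not attempt(p)]  # full drill or full review
        if not missed:
            return full_passes  # a clean full pass: move on to the next stage
        while not clean_pass(missed, attempt):  # missed-only review
            continue


learner = make_learner({"q1": 1, "q2": 3, "q3": 2})
passes_needed = run_stage(["q1", "q2", "q3"], learner)
print(passes_needed)
```

The same function could be called once per stage (basic, applied, exam-level), with the exam-level problem list produced by the target-selection step.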

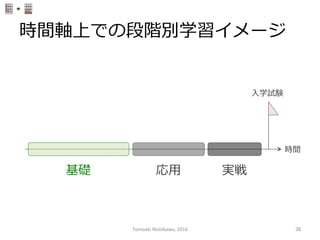

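- 38. Stage-by-stage study on a timeline. (Diagram: a time axis leading up to the entrance exam, with the basic, applied, and exam-level stages following one another.)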

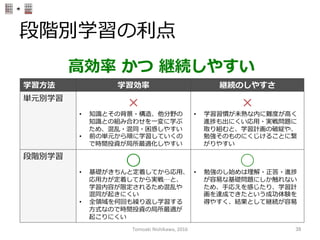

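- 39. Advantages of stage-by-stage study

  | Method | Learning efficiency | Ease of continuing |
  | --- | --- | --- |
  | Unit-by-unit study | ×. Knowledge, its background and structure, and its combination with knowledge from other fields are all learned at once, which easily leads to confusion and mix-ups. Units are studied in order from the first, so time investment tends to be optimized only locally. | ×. Taking on applied and exam-level problems, which are hard and slow to progress through, while the study habit is still immature easily leads to a broken study plan or to giving up on studying altogether. |
  | Stage-by-stage study | ◯. Applied work starts only after the basics are consolidated, and exam-level work only after applied skills are consolidated, so the content at any one time is limited and confusion is unlikely. The whole range is covered repeatedly, so local optimization of time investment is unlikely. | ◯. At the start the student meets only basic problems, which are easy to understand, answer, and get through. That makes it easy to feel progress and to experience success in meeting the study plan, and so easy to continue. |

  Stage-by-stage study is both highly efficient and easy to continue.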

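- 40. Start by making it easy to continue, and aim for a child who studies as a matter of course. The biggest difference between children who do well at studying and those who do not is whether they keep studying.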


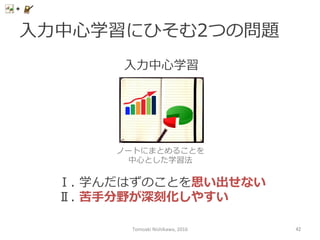

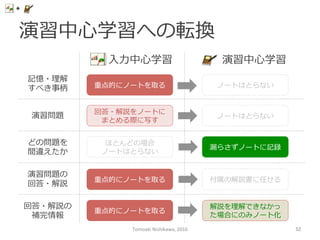

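- 42. Two problems lurking in input-centered study. Input-centered study is a method built around summarizing material in a notebook.
  - I. Things that were supposedly learned cannot be recalled.
  - II. Weak areas tend to get worse.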

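- 43. How the problems arise
  - Cannot recall. Why? 1. Consolidating memory requires review; 2. The high cost of note-making
  - Weak areas get worse. Why? 3. How hard it is to notice what you do not understand; 4. The nature of vertical subjects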

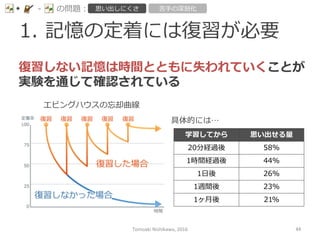

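- 44. 1. Consolidating memory requires review. Experiments have confirmed that memories that are not reviewed are lost over time (the Ebbinghaus forgetting curve). Specifically:

  | Time since learning | Amount that can be recalled |
  | --- | --- |
  | 20 minutes | 58% |
  | 1 hour | 44% |
  | 1 day | 26% |
  | 1 week | 23% |
  | 1 month | 21% |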

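- 45. 2. The high cost of note-making
  - The process: encounter the material → think about how to summarize it → write it out neatly.
  - The costs: thinking, time, and physical energy.
  - Note-making amounts to re-creating the textbook or reference book at the full cost of thought, time, and energy.
  - Textbooks and reference books, which professionals put their heart into as their job, are already well organized (better than most notes).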

- 46. Why learned material cannot be recalled: too little is invested in review. If you can afford the cost of making neat notes, spend it on review instead.

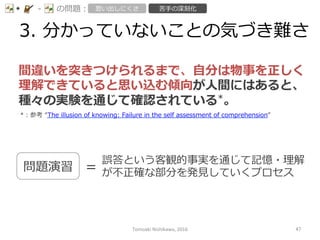

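- 47. 3. How hard it is to notice what you do not understand. Various experiments have confirmed that people tend to assume they understand something correctly until they are confronted with a mistake.* Drilling problems is the process of discovering where memory and understanding are inaccurate through the objective fact of a wrong answer.
  - *: Reference: "The illusion of knowing: Failure in the self assessment of comprehension"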

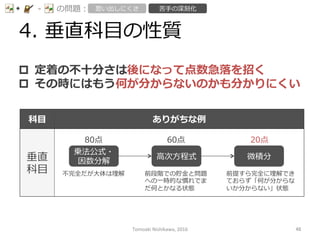

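- 48. 4. The nature of vertical subjects
  - Insufficient consolidation leads to a sharp drop in scores later on.
  - By then it is hard even to tell what it is that you do not understand.

  | Subject type | Stage 1 | Stage 2 | Stage 3 |
  | --- | --- | --- | --- |
  | Vertical | Expansion formulas and factoring: 80 points. Incomplete, but mostly understood. | Higher-degree equations: 60 points. Still getting by on savings from the previous stage and temporary familiarity with the problems. | Calculus: 20 points. Even the prerequisites are not fully understood; the student "doesn't know what they don't know". |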

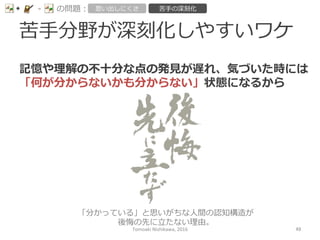

- 49. Why weak areas tend to get worse: gaps in memory and understanding are discovered late, and by the time they are noticed the student "doesn't even know what they don't know". The human tendency to believe "I understand this" is why regret always comes too late.

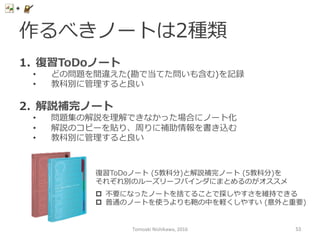

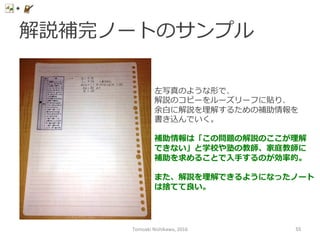

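- 53. Two kinds of notebook worth making
  1. Review to-do notebook
     - Record which problems you got wrong (including ones you got right by guessing).
     - Keeping one per subject works well.
  2. Explanation-supplement notebook
     - Make an entry whenever you could not understand the explanation in the workbook.
     - Paste in a copy of the explanation and write supplementary information around it.
     - Keeping one per subject works well.

  Keeping the review to-do notes (five subjects) and the explanation-supplement notes (five subjects) in two separate loose-leaf binders is recommended.
  - Throwing away pages that are no longer needed keeps things easy to find.
  - Your bag is lighter than with ordinary notebooks (more important than it sounds).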

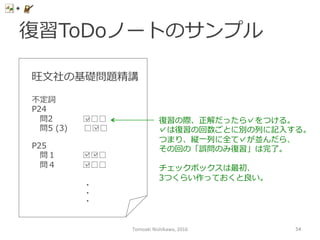

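- 54. Sample review to-do notebook

  Obunsha "Kiso Mondai Seikou", Infinitives

  | Page | Problem | Checkboxes |
  | --- | --- | --- |
  | P24 | Q2 | □□□ |
  | P24 | Q5 (3) | □□□ |
  | P25 | Q1 | □□□ |
  | P25 | Q4 | □□□ |
  | ... | | |

  - When reviewing, put a ✓ if you answered correctly.
  - Use a separate column of boxes for each review round. When one whole column is filled with ✓, that round of missed-only review is complete.
  - Drawing about three checkboxes per problem to begin with works well.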
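For families who would rather keep this notebook digitally, the same layout can be sketched in a few lines. The `ReviewItem` class and both helper functions are hypothetical names chosen for this illustration; the only rules taken from the slide are "one column of boxes per review round" and "a round is complete when its whole column is ticked".

```python
from dataclasses import dataclass, field


@dataclass
class ReviewItem:
    """One missed problem, with one checkbox per review round."""
    label: str
    checks: list = field(default_factory=lambda: [False, False, False])


def mark_correct(item, round_index):
    """Tick the box for this round, adding boxes if the initial three run out."""
    while len(item.checks) <= round_index:
        item.checks.append(False)
    item.checks[round_index] = True


def round_complete(items, round_index):
    """A review round is complete when every item is ticked in that column."""
    return all(
        round_index < len(item.checks) and item.checks[round_index]
        for item in items
    )


notebook = [ReviewItem("P24 Q2"), ReviewItem("P24 Q5 (3)"), ReviewItem("P25 Q1")]
mark_correct(notebook[0], 0)
mark_correct(notebook[1], 0)
first_check = round_complete(notebook, 0)   # P25 Q1 is still unticked
mark_correct(notebook[2], 0)
second_check = round_complete(notebook, 0)  # the whole first column is ticked
print(first_check, second_check)
```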

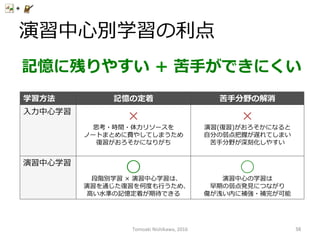

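- 56. Advantages of practice-centered study

  | Method | Memory consolidation | Resolving weak areas |
  | --- | --- | --- |
  | Input-centered study | ×. Thinking, time, and energy are spent on note-making, so review tends to be neglected. | ×. When drilling (review) is neglected, weaknesses are identified late and weak areas tend to get worse. |
  | Practice-centered study | ◯. Combining stage-by-stage study with practice-centered study means reviewing through drills many times over, so a high level of retention can be expected. | ◯. Practice-centered study exposes weaknesses early, so they can be shored up while the damage is still small. |

  The material sticks in memory, and weak areas are less likely to form.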


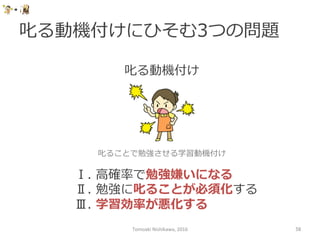

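- 58. Three problems lurking in motivation by scolding. Motivation by scolding means getting a child to study by scolding them.
  - I. The child is very likely to come to hate studying.
  - II. Scolding becomes a prerequisite for studying.
  - III. Learning efficiency gets worse.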

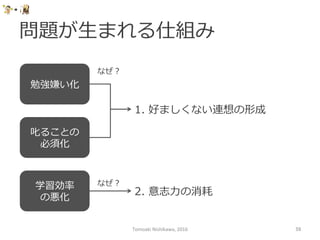

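- 59. How the problems arise
  - Coming to hate studying, and scolding becoming a prerequisite. Why? 1. The formation of undesirable associations
  - Worse learning efficiency. Why? 2. The depletion of willpower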

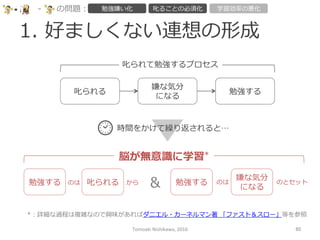

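- 60. 1. The formation of undesirable associations. The process of studying because of scolding runs: get scolded → feel bad → study. When this is repeated over time, the brain unconsciously learns* that "I study because I get scolded" and that "studying comes as a set with feeling bad".
  - *: The detailed process is complex; if you are interested, see Daniel Kahneman's "Thinking, Fast and Slow" and similar works.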

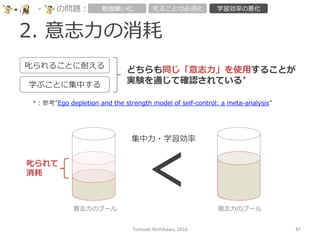

- 61. 2. The depletion of willpower. Enduring a scolding and concentrating on learning both draw on the same "willpower", as experiments have confirmed.* (Diagram: being scolded drains the pool of willpower, leaving less for concentration and learning efficiency.)
  - *: Reference: "Ego depletion and the strength model of self-control: a meta-analysis"

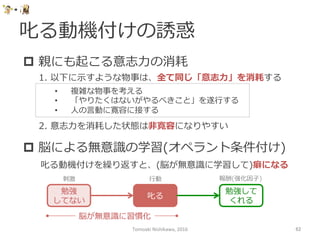

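- 62. The temptation of motivating by scolding
  - Willpower depletion happens to parents too.
    1. The following all draw down the same "willpower": thinking about complex matters; carrying out things you do not want to do but should; treating other people's words and actions with tolerance.
    2. A person whose willpower is depleted easily becomes intolerant.
  - Unconscious learning by the brain (operant conditioning): stimulus (the child is not studying) → behavior (scold) → reward, or reinforcer (the child studies). When motivation by scolding is repeated, the brain learns it unconsciously and it becomes a habit.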

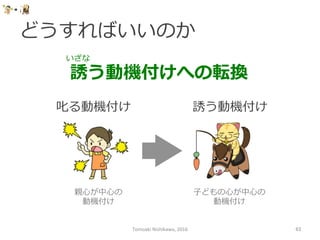

- 63. What to do: switch to motivation by invitation.
  - Motivation by scolding: motivation centered on the parent's feelings.
  - Motivation by invitation: motivation centered on the child's feelings.

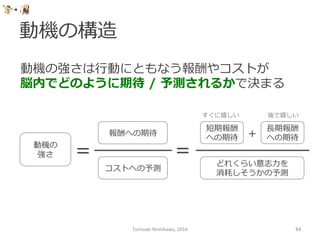

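- 64. The structure of motivation. The strength of motivation is determined by how the brain expects and predicts the rewards and costs that come with an action.
  - Strength of motivation = expectation of reward / prediction of cost
  - Expectation of reward = expectation of short-term reward ("pleasant right away") + expectation of long-term reward ("pleasant later")
  - Prediction of cost = the prediction of how much willpower the action is likely to drain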
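Written out as a single expression, the relationship on this slide might look like the following. The symbols $M$, $R_{\text{short}}$, $R_{\text{long}}$, and $C$ are labels introduced here for illustration, and reading the slide's layout as a ratio (rather than, say, a difference) is an assumption.

```latex
M \;=\; \frac{\text{expectation of reward}}{\text{prediction of cost}}
  \;=\; \frac{R_{\text{short}} + R_{\text{long}}}{C}
```

Under this reading, motivation $M$ rises when either reward expectation goes up or when the predicted willpower cost $C$ goes down.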

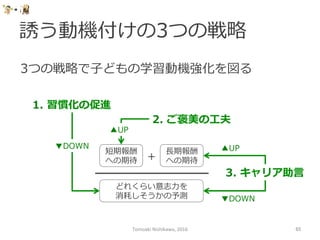

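- 65. Three strategies for motivation by invitation: 1. Promote habit formation; 2. Design the rewards; 3. Give career advice. The three strategies aim to strengthen the child's motivation to learn. (Diagram: the strategies push the expectation of short-term and long-term reward ▲UP and the predicted drain on willpower ▼DOWN.)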

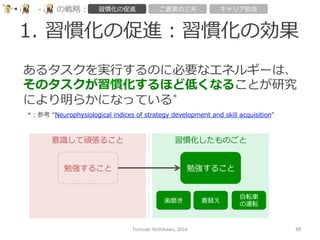

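- 66. 1. Promote habit formation: the effect of habit. Research has shown that the energy needed to carry out a task falls as the task becomes more of a habit.* (Diagram: studying moves from "things you consciously push yourself to do" to "things that have become habits", alongside brushing teeth, getting dressed, and riding a bicycle.)
  - *: Reference: "Neurophysiological indices of strategy development and skill acquisition"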

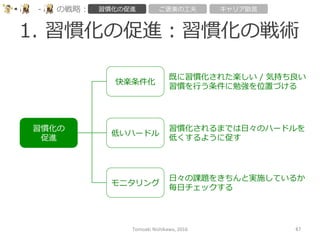

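- 67. 1. Promote habit formation: tactics
  - Pleasure conditioning: make studying the condition for doing a fun or pleasant habit that is already established.
  - Low hurdles: encourage the child to keep each day's hurdle low until the habit has formed.
  - Monitoring: check every day that the day's tasks have actually been done.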

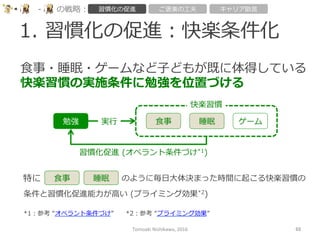

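- 68. 1. Promote habit formation: pleasure conditioning. Make studying the precondition for pleasure habits the child already has, such as meals, sleep, and games. Studying → carrying out the pleasure habit → the study habit is reinforced (operant conditioning *1). Pleasure habits that happen at roughly the same time every day, such as meals and sleep, are especially effective as the condition for promoting the habit (priming effect *2).
  - *1: Reference: "operant conditioning"
  - *2: Reference: "priming effect"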

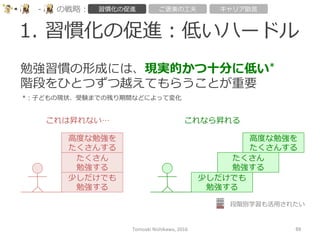

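- 69. 1. Promote habit formation: low hurdles. To form a study habit, it is important to have the child climb realistic and sufficiently low* steps one at a time: study at least a little → study a lot → do a lot of advanced study. (Diagram: presented as one giant step, "I can't climb this..."; presented as small steps, "this I can climb".) Stage-by-stage study can be put to use here as well.
  - *: How low depends on the child's current situation, the time remaining until the exam, and so on.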

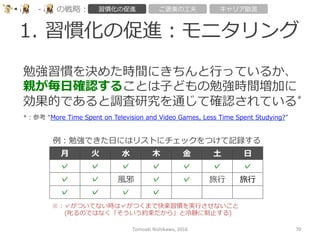

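- 70. 1. Promote habit formation: monitoring. Survey research has confirmed that a parent checking every day whether the child is studying at the agreed time is effective in increasing the child's study time.*
  - *: Reference: "More Time Spent on Television and Video Games, Less Time Spent Studying?"

  Example: on days when the child studied, tick the list to record it.

  | Mon | Tue | Wed | Thu | Fri | Sat | Sun |
  | --- | --- | --- | --- | --- | --- | --- |
  | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
  | ✓ | ✓ | cold | ✓ | ✓ | trip | trip |
  | ✓ | ✓ | ✓ | ✓ | | | |

  Note: when a day has no ✓, do not let the child carry out the pleasure habit until it does. (Do not scold; calmly hold the line with "that was our agreement".)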

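- 71. 2. Design the rewards. A reward for a desirable habit sustains the habit more strongly when it is given with an element of luck.* For example:
  1. If the child studies every day for a week, let them roll a die.
  2. If the die shows a number greater than 1, give them "the number rolled × 100 yen".

  The gacha mechanic in social games can be seen as an application of this mechanism.
  - *: Reference: "operant conditioning"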
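A parent budgeting for this scheme may want to know what one earned roll costs on average. The sketch below encodes the two rules from the example and computes that average exactly; the function name `weekly_reward` and the assumption of a fair six-sided die are illustrative choices, not part of the slide.

```python
import random
from fractions import Fraction


def weekly_reward(days_studied, roll):
    """Yen paid for one week, given seven booleans and the die face rolled."""
    if len(days_studied) != 7 or not all(days_studied):
        return 0  # no roll is earned unless every day of the week is ticked
    return roll * 100 if roll > 1 else 0  # a 1 pays nothing


def roll_die(rng=random):
    """Roll a fair six-sided die."""
    return rng.randint(1, 6)


# Exact expected payout of one earned roll, assuming a fair die.
full_week = [True] * 7
expected_yen = sum(Fraction(weekly_reward(full_week, face)) for face in range(1, 7)) / 6

print(float(expected_yen))                    # average yen per successful week
print(weekly_reward(full_week, roll_die()))   # one simulated week
```

The average works out to 1000/3 yen (about 333 yen) per successful week, while any single week pays anywhere from 0 to 600 yen, which is the element of luck the slide relies on.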

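- 72. 3. Give career advice. Forcing someone to do something whose point they cannot feel drains their willpower.* When parent and child think through "why study now" together in conversation, starting from the child's own vision of the future, it builds the expectation of long-term reward and can also ease the child's reluctance to get started on studying.
  - *: Reference: "Ego depletion and the strength model of self-control: a meta-analysis"
  - Note: the further away the future is, the weaker the expectation of reward becomes. For a child who is still young (for example, in elementary school), this strategy is therefore unlikely to have much effect.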

- 73. The advantage of motivation by invitation: a habit of learning on one's own initiative forms more easily.

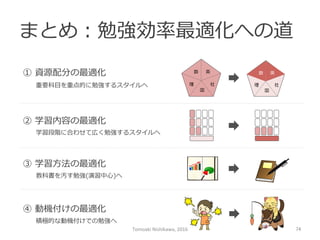

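- 74. Summary: the road to optimizing study efficiency
  - (1) Optimize resource allocation: move to a style that concentrates on the important subjects.
  - (2) Optimize learning content: move to a style that studies broadly, in step with the learning stage.
  - (3) Optimize learning method: move to studying that marks up the textbook (practice-centered).
  - (4) Optimize motivation: move to studying driven by positive motivation.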

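- 76. There is no end to what we should learn, for as long as we live.

  "Learn as if you will live forever. Live as if you will die tomorrow." [Mahatma Gandhi]

  Exam preparation can be seen simply as the work required to get into the school of one's choice. But it can also be seen as training in learning efficiently and on one's own initiative toward a goal. To any one person the world is vast and society is complex, so there is no end to what should be learned. I sincerely hope that children's exam preparation becomes not mere work but a process of acquiring habits that will serve them in the future, and if this document contributes to that even a little, nothing could make me happier as its author.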

- 77. Happy Studying!

![Slide 76: There is no end to what we should learn](https://image.slidesharecdn.com/random-160715121524/85/-76-320.jpg)